Scaling a Node.js Microservices App with React.js Frontend to Handle 5 Million Requests Per Second

Introduction:

Imagine we've built a sophisticated web application using a microservices architecture, with Node.js for the backend services and React.js for the frontend. Let's walk through how we can scale this application to handle an enormous load of 5 million requests per second (RPS).

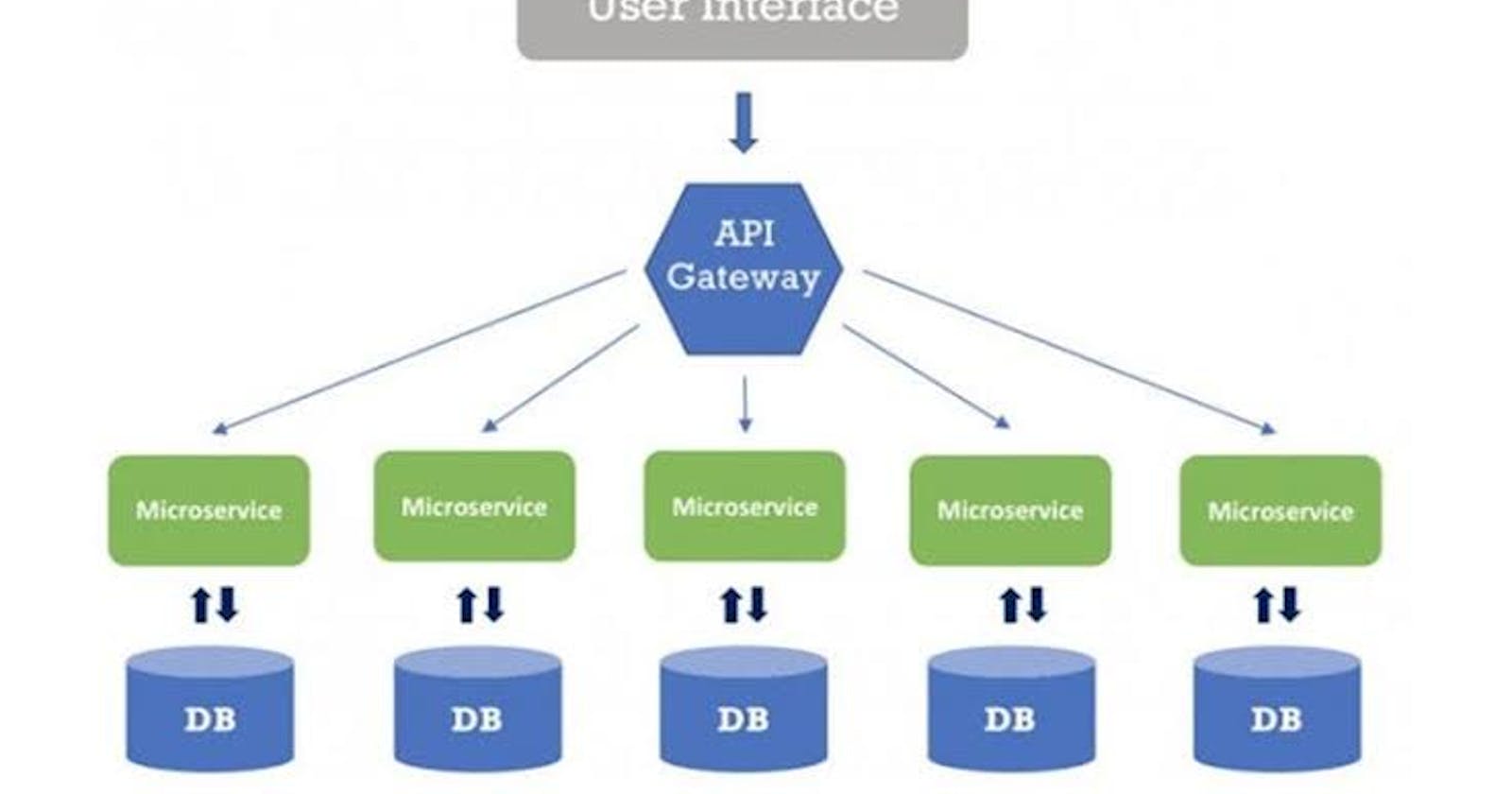

1. Microservices Architecture:

Imagine our web application is divided into separate services, each responsible for a specific function such as user authentication, data processing, or content delivery, so that each service can be deployed and scaled independently. For instance:

User Service: Manages user registration, authentication, and profile management.

Content Service: Handles content creation, storage, and retrieval.

Analytics Service: Processes user activity data for analytics and insights.
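To make this split concrete, here is a minimal TypeScript sketch of how requests could be mapped to services by URL path prefix. This is the same idea an ALB listener rule or an API gateway applies. The prefixes (`/api/users` and so on) and upstream addresses are hypothetical placeholders, not part of the design above.

```typescript
// Hypothetical routing table: URL path prefixes mapped to the three services above.
// The prefixes and upstream addresses are illustrative assumptions.
interface Route {
  prefix: string;
  service: string;
  upstream: string;
}

const routes: Route[] = [
  { prefix: "/api/users", service: "user-service", upstream: "http://user-service:3000" },
  { prefix: "/api/content", service: "content-service", upstream: "http://content-service:3000" },
  { prefix: "/api/analytics", service: "analytics-service", upstream: "http://analytics-service:3000" },
];

// Return the route with the longest matching prefix. A prefix only matches on a
// path-segment boundary, so "/api/usersX" does not match "/api/users".
function resolveRoute(path: string): Route | undefined {
  let best: Route | undefined;
  for (const route of routes) {
    const matches = path === route.prefix || path.startsWith(route.prefix + "/");
    if (matches && (best === undefined || route.prefix.length > best.prefix.length)) {
      best = route;
    }
  }
  return best;
}

console.log(resolveRoute("/api/users/42/profile")?.service); // user-service
console.log(resolveRoute("/api/unknown")); // undefined
```

Longest-prefix matching keeps the table correct if a more specific prefix is added later, for example a hypothetical separate service for `/api/users/avatars`.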

2. Horizontal Scaling:

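Now, envision each microservice running as a containerized application. We use Docker to package each service with its dependencies, so it behaves consistently across environments. These containers are orchestrated with Amazon Elastic Container Service (ECS) or Amazon Elastic Kubernetes Service (EKS) and scaled horizontally. In other words, we add more identical copies of a service (ECS tasks or Kubernetes pods) rather than moving to bigger machines. This works best when services are stateless, with session data kept in a shared store such as the Redis cache described below.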
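Horizontal scaling turns capacity planning into arithmetic: divide the target load by what one replica can sustain, and keep some headroom. Here is a back-of-envelope sketch. The per-replica throughput (2,000 RPS) and the 70% utilization target are assumptions; replace them with figures from your own load tests. In practice, the 5 million requests are also split across services and partly absorbed by the CDN.

```typescript
// Back-of-envelope replica count for one horizontally scaled service.
// perReplicaRps must come from load testing; the example numbers are assumptions.
function replicasNeeded(targetRps: number, perReplicaRps: number, targetUtilization: number): number {
  if (perReplicaRps <= 0 || targetUtilization <= 0 || targetUtilization > 1) {
    throw new Error("perReplicaRps must be > 0 and targetUtilization must be in (0, 1]");
  }
  // Run each replica below its measured ceiling so spikes and failed replicas leave headroom.
  const effectiveRps = perReplicaRps * targetUtilization;
  return Math.ceil(targetRps / effectiveRps);
}

// Hypothetical: 5M RPS in total, 2,000 RPS measured per replica, 70% target utilization.
console.log(replicasNeeded(5000000, 2000, 0.7)); // 3572
```

With those assumed numbers, a single service would need 3,572 replicas. This is why the measured per-replica figure, and how much traffic never reaches the backend, matter so much.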

3. Auto Scaling:

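We configure auto scaling for each microservice, with scaling policies based on metrics such as CPU utilization or request count per target. On ECS, Service Auto Scaling adjusts the number of tasks. On EKS, the Horizontal Pod Autoscaler adjusts the number of pods. When containers run on EC2 instances rather than AWS Fargate, EC2 Auto Scaling groups add or remove the underlying instances. For example, if CPU utilization exceeds a chosen threshold, more copies of the service are started to absorb the increased load. Conversely, copies are removed during periods of low demand to reduce costs.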
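The scaling decision itself is usually proportional. The sketch below uses the formula documented for the Kubernetes Horizontal Pod Autoscaler, which is relevant if you run on EKS:

desired = ceil(current * currentMetric / target)

The result is clamped to a minimum and maximum, and a tolerance band prevents flapping. ECS target tracking policies pursue the same goal, but their internal algorithm is not necessarily this exact formula. The 60% CPU target and the replica bounds are assumptions.

```typescript
// Proportional scaling rule modeled on the Kubernetes Horizontal Pod Autoscaler:
// desired = ceil(current * metricValue / targetValue), clamped to [minReplicas, maxReplicas].
interface ScalingPolicy {
  targetValue: number; // e.g. 60 (% CPU); an assumed target
  minReplicas: number;
  maxReplicas: number;
  tolerance: number; // skip changes when the ratio is within 1 +/- tolerance (HPA default is 0.1)
}

function clamp(value: number, min: number, max: number): number {
  return Math.min(max, Math.max(min, value));
}

function desiredReplicas(current: number, metricValue: number, policy: ScalingPolicy): number {
  const ratio = metricValue / policy.targetValue;
  // Inside the tolerance band: keep the current count to avoid flapping.
  if (Math.abs(ratio - 1) <= policy.tolerance) {
    return clamp(current, policy.minReplicas, policy.maxReplicas);
  }
  return clamp(Math.ceil(current * ratio), policy.minReplicas, policy.maxReplicas);
}

const policy: ScalingPolicy = { targetValue: 60, minReplicas: 2, maxReplicas: 100, tolerance: 0.1 };
console.log(desiredReplicas(10, 90, policy)); // 15: CPU at 90% vs a 60% target, scale out
console.log(desiredReplicas(10, 30, policy)); // 5: low demand, scale in
console.log(desiredReplicas(10, 63, policy)); // 10: within tolerance, no change
```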

4. Load Balancing:

Imagine an Application Load Balancer (ALB) sitting in front of our microservices, distributing incoming traffic across multiple targets for even workload distribution and high availability. Listener rules can also route requests by URL path to each service's own target group. The ALB periodically checks the health of each target and routes traffic only to healthy ones.
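Round robin is the default routing algorithm for an ALB target group; least outstanding requests is one alternative. The sketch below combines round robin with health-check thresholds. A target is marked unhealthy only after several consecutive failed checks, and healthy again only after several consecutive passes. This mirrors the ALB's `HealthyThresholdCount` and `UnhealthyThresholdCount` settings. The threshold values and addresses are illustrative assumptions. A real ALB does all of this for you; the code only shows the mechanics.

```typescript
// Round-robin balancer that skips unhealthy targets, loosely modeled on ALB target groups.
// Threshold defaults (3 healthy / 2 unhealthy) are illustrative assumptions.
interface Target {
  address: string;
  healthy: boolean;
  consecutiveSuccesses: number;
  consecutiveFailures: number;
}

class RoundRobinBalancer {
  private readonly targets: Target[];
  private readonly healthyThreshold: number;
  private readonly unhealthyThreshold: number;
  private next = 0;

  constructor(addresses: string[], healthyThreshold = 3, unhealthyThreshold = 2) {
    this.targets = addresses.map((address) => ({
      address,
      healthy: true,
      consecutiveSuccesses: 0,
      consecutiveFailures: 0,
    }));
    this.healthyThreshold = healthyThreshold;
    this.unhealthyThreshold = unhealthyThreshold;
  }

  // Record one health-check result; flip state only after enough consecutive results.
  recordHealthCheck(address: string, passed: boolean): void {
    const target = this.targets.find((t) => t.address === address);
    if (!target) return;
    if (passed) {
      target.consecutiveSuccesses++;
      target.consecutiveFailures = 0;
      if (!target.healthy && target.consecutiveSuccesses >= this.healthyThreshold) target.healthy = true;
    } else {
      target.consecutiveFailures++;
      target.consecutiveSuccesses = 0;
      if (target.healthy && target.consecutiveFailures >= this.unhealthyThreshold) target.healthy = false;
    }
  }

  // Return the next healthy target in rotation, or undefined if none are healthy.
  pick(): string | undefined {
    for (let i = 0; i < this.targets.length; i++) {
      const target = this.targets[(this.next + i) % this.targets.length];
      if (target.healthy) {
        this.next = (this.next + i + 1) % this.targets.length;
        return target.address;
      }
    }
    return undefined;
  }
}

const lb = new RoundRobinBalancer(["10.0.1.10:3000", "10.0.2.10:3000", "10.0.3.10:3000"]);
lb.recordHealthCheck("10.0.2.10:3000", false);
lb.recordHealthCheck("10.0.2.10:3000", false); // second consecutive failure: marked unhealthy
console.log([lb.pick(), lb.pick(), lb.pick()]); // [ '10.0.1.10:3000', '10.0.3.10:3000', '10.0.1.10:3000' ]
```

Requiring consecutive results before changing state keeps a single slow health check from pulling a healthy target out of rotation.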

5. Caching and Optimizations:

To improve performance and reduce database load, we implement caching with Amazon ElastiCache for Redis. Imagine frequently accessed data, such as user sessions or popular content, being stored in memory for faster access. Serving these reads from memory cuts the number of database queries, which lowers latency and keeps the database from becoming the bottleneck as traffic grows.
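A common pattern here is cache-aside:

1. Check the cache.
2. On a miss, read from the database.
3. Store the result with a time-to-live (TTL).

In this sketch, an in-memory `Map` stands in for Redis, and the database loader is synchronous for brevity. With ElastiCache, the same steps correspond to a Redis `GET` followed by `SET key value EX seconds`, issued through an async client. The 30-second TTL and the `fakeDb` function are hypothetical.

```typescript
// Cache-aside with a TTL. A Map stands in for Redis (assumption for a self-contained sketch).
interface Entry<V> {
  value: V;
  expiresAt: number;
}

class CacheAside<V> {
  private readonly store = new Map<string, Entry<V>>();
  private readonly ttlMs: number;
  private readonly now: () => number;
  hits = 0;
  misses = 0;

  constructor(ttlMs: number, now: () => number = () => Date.now()) {
    this.ttlMs = ttlMs;
    this.now = now;
  }

  // Return the cached value if still fresh; otherwise load from the source of truth and cache it.
  get(key: string, loadFromDb: (key: string) => V): V {
    const entry = this.store.get(key);
    if (entry && entry.expiresAt > this.now()) {
      this.hits++;
      return entry.value;
    }
    this.misses++;
    const value = loadFromDb(key);
    this.store.set(key, { value, expiresAt: this.now() + this.ttlMs });
    return value;
  }

  // Call after a write so the next read fetches fresh data instead of a stale copy.
  invalidate(key: string): void {
    this.store.delete(key);
  }
}

let clock = 0; // fake clock so the TTL behavior is deterministic
let dbReads = 0;
const cache = new CacheAside<string>(30000, () => clock);
const fakeDb = (key: string): string => {
  dbReads++;
  return `content for ${key}`;
};

cache.get("post:1", fakeDb); // miss: reads the database
cache.get("post:1", fakeDb); // hit: served from memory
clock = 31000; // the 30 s TTL has elapsed
cache.get("post:1", fakeDb); // miss again
console.log(dbReads, cache.hits, cache.misses); // 2 1 2
```

The TTL bounds how stale a cached value can get. Explicit invalidation on writes keeps frequently updated data, such as a user's profile, from waiting out the full TTL.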

6. React.js Frontend Optimization:

On the frontend side, we optimize our React.js components for performance. We use code splitting to divide the JavaScript bundle into smaller chunks. Lazy loading then fetches each chunk only when the component that needs it is rendered. This reduces the initial load time and improves the perceived performance for users.
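In React, code splitting is typically written as `React.lazy(() => import("./SomeComponent"))`, rendered inside a `<Suspense>` boundary. The bundler turns each dynamic `import()` into a separate chunk. The sketch below isolates the behavior underneath that: load a module only on first use, and share the result with every later caller. It uses plain promises so it runs without React or a bundler. `lazyOnce` and `loadChartModule` are hypothetical names, and the loader stands in for a dynamic import.

```typescript
// Load-once, on-demand loader: nothing is fetched until the first call, and later
// calls share the same promise. On failure the cached promise is cleared so a retry
// can fetch the chunk again (e.g. after a transient network error).
function lazyOnce<T>(loader: () => Promise<T>): () => Promise<T> {
  let pending: Promise<T> | undefined;
  return () => {
    if (pending === undefined) {
      pending = loader().catch((err: unknown) => {
        pending = undefined;
        throw err;
      });
    }
    return pending;
  };
}

// Hypothetical stand-in for `() => import("./AnalyticsChart")`.
let loads = 0;
const loadChartModule = lazyOnce(async () => {
  loads++;
  return { render: (points: number[]) => `chart with ${points.length} points` };
});

console.log(loads); // 0: nothing loaded at startup
const first = loadChartModule();
const second = loadChartModule();
console.log(loads, first === second); // 1 true
first.then((mod) => console.log(mod.render([1, 2, 3]))); // logs: chart with 3 points
```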

7. Content Delivery Network (CDN):

We use a CDN such as Amazon CloudFront to cache and deliver static assets (e.g., JavaScript, CSS, images) closer to users. Imagine these assets being distributed across edge locations worldwide, reducing latency for users regardless of their geographical location. Requests served from an edge cache never reach our origin, so the CDN can also absorb a large share of the total request volume.
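A CDN only helps if assets are cacheable. CloudFront honors the `Cache-Control` header sent by the origin, within the minimum and maximum TTLs set in its cache policy. A common approach is therefore to cache content-hashed build files for a long time and make the HTML entry point revalidate. This sketch assumes the build emits file names containing a hex content hash of at least 8 characters, as Create React App's default configuration does; other bundlers differ, so adjust the pattern to your build. The 5-minute fallback TTL is an arbitrary assumption.

```typescript
// Choose a Cache-Control header for files the origin serves through the CDN.
// Assumes hashed names like main.3f9a1c2b.js or 787.1a2b3c4d.chunk.js (an assumption about the build).
const HASHED_ASSET = /\.[0-9a-f]{8,}\.(?:chunk\.)?(?:js|css|png|jpe?g|svg|webp|woff2)$/i;

function cacheControlFor(path: string): string {
  if (HASHED_ASSET.test(path)) {
    // One year, and no revalidation: a changed file always gets a new URL.
    return "public, max-age=31536000, immutable";
  }
  if (path === "/" || path.endsWith(".html")) {
    // The HTML entry point must be revalidated so users pick up new asset URLs after a deploy.
    return "no-cache";
  }
  // Anything else: a short, conservative TTL (assumed value; tune per asset type).
  return "public, max-age=300";
}

console.log(cacheControlFor("/static/js/main.3f9a1c2b.js")); // public, max-age=31536000, immutable
console.log(cacheControlFor("/index.html")); // no-cache
console.log(cacheControlFor("/favicon.ico")); // public, max-age=300
```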

8. Monitoring and Alerting:

Lastly, we set up Amazon CloudWatch metrics and alarms to monitor the health and performance of our infrastructure. Imagine dashboards displaying near-real-time metrics such as CPU utilization, memory usage, and request latency. We configure alarms to publish to an Amazon SNS topic, which notifies us by email or SMS when a metric breaches a predefined threshold. This lets us address issues proactively and keep the user experience seamless.
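CloudWatch alarms can avoid paging on a single spike by requiring several breaching datapoints. An alarm with `DatapointsToAlarm` set to M and `EvaluationPeriods` set to N enters the ALARM state when M of the last N datapoints breach the threshold. Here is a simplified sketch of that rule. It ignores missing-data handling and the INSUFFICIENT_DATA state, and the 500 ms latency threshold is an assumption.

```typescript
// Simplified "M out of N" alarm evaluation, modeled on CloudWatch's EvaluationPeriods
// and DatapointsToAlarm settings. Missing data and INSUFFICIENT_DATA are left out.
type AlarmState = "OK" | "ALARM";

interface AlarmConfig {
  threshold: number; // e.g. 500 ms request latency; an assumed value
  evaluationPeriods: number; // N: how many recent datapoints to examine
  datapointsToAlarm: number; // M: how many of those must breach
}

function evaluateAlarm(datapoints: number[], config: AlarmConfig): AlarmState {
  const recent = datapoints.slice(-config.evaluationPeriods);
  const breaching = recent.filter((value) => value > config.threshold).length;
  return breaching >= config.datapointsToAlarm ? "ALARM" : "OK";
}

const latencyAlarm: AlarmConfig = { threshold: 500, evaluationPeriods: 5, datapointsToAlarm: 3 };
console.log(evaluateAlarm([120, 130, 900, 140, 150], latencyAlarm)); // OK: a single spike
console.log(evaluateAlarm([120, 700, 650, 140, 800], latencyAlarm)); // ALARM: 3 of the last 5 breach
```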

By following these practical steps and leveraging the capabilities of AWS, we can scale our Node.js microservices app with a React.js frontend to handle millions of requests per second, while providing a reliable, high-performance experience for users.